The history of the Internet began with the development of electronic computers in the 1950s. The public was first introduced to the concepts that would lead to the Internet when a message was sent over the ARPANET from computer science professor Leonard Kleinrock's laboratory at the University of California, Los Angeles (UCLA), after the second piece of network equipment was installed at Stanford Research Institute (SRI). Packet-switched networks such as ARPANET, Mark I at NPL in the UK, CYCLADES, Merit Network, Tymnet, and Telenet were developed in the late 1960s and early 1970s using a variety of protocols. The ARPANET in particular led to the development of protocols for internetworking, in which multiple separate networks could be joined together into a network of networks.

In 1982, the Internet protocol suite (TCP/IP) was standardized, and consequently, the concept of a world-wide network of interconnected TCP/IP networks, called the Internet, was introduced. Access to the ARPANET was expanded in 1981 when the National Science Foundation (NSF) developed the Computer Science Network (CSNET) and again in 1986 when NSFNET provided access to supercomputer sites in the United States from research and education organizations. Commercial Internet service providers (ISPs) began to emerge in the late 1980s and early 1990s. The ARPANET was decommissioned in 1990. The Internet was commercialized in 1995 when NSFNET was decommissioned, removing the last restrictions on the use of the Internet to carry commercial traffic.

| | | |
|---|---|---|
| Vannevar Bush | Claude Shannon | J. C. R. Licklider |
| Paul Baran | Ted Nelson | Leonard Kleinrock |
| Lawrence Roberts | Steve Crocker | Jon Postel |
| Vinton Cerf | Robert Kahn | Christian Huitema |
| Brian Carpenter | Tim Berners-Lee | Marc Andreessen |

Since the mid-1990s, the Internet has had a revolutionary impact on culture and commerce, including the rise of near-instant communication by electronic mail, instant messaging, Voice over Internet Protocol (VoIP) "phone calls", two-way interactive video calls, and the World Wide Web with its discussion forums, blogs, social networking, and online shopping sites. The research and education community continues to develop and use advanced networks such as NSF's very high speed Backbone Network Service (vBNS), Internet2, and National LambdaRail. Increasing amounts of data are transmitted at ever higher speeds over fiber-optic networks operating at 1 Gbit/s, 10 Gbit/s, or more. The Internet's takeover of the global communication landscape was almost instant in historical terms: it carried only 1% of the information flowing through two-way telecommunications networks in 1993, 51% by 2000, and more than 97% of the telecommunicated information by 2007.[1] Today the Internet continues to grow, driven by ever greater amounts of online information, commerce, entertainment, and social networking.

The telegraph system was the first fully digital communication system. The Internet thus has precursors, such as the telegraph system, that date back to the 19th century, more than a century before the digital Internet became widely used in the second half of the 1990s. The concept of data communication (transmitting data between two different places over an electromagnetic medium, such as radio or an electrical wire) predates the introduction of the first computers. Such communication systems were typically limited to point-to-point communication between two end devices. Telegraph systems and telex machines can be considered early precursors of this kind of communication.

Fundamental theoretical work in data transmission and information theory was developed by Claude Shannon, Harry Nyquist, and Ralph Hartley during the early 20th century.

Early computers used the technology available at the time to allow communication between the central processing unit and remote terminals. As the technology evolved, new systems were devised to allow communication over longer distances (for terminals) or with higher speed (for interconnection of local devices) that were necessary for the mainframe computer model. Using these technologies made it possible to exchange data (such as files) between remote computers. However, the point-to-point communication model was limited, as it did not allow for direct communication between any two arbitrary systems; a physical link was necessary. The technology was also deemed inherently unsafe for strategic and military use, because there were no alternative paths for the communication in case of an enemy attack.

Three terminals and an ARPA

A fundamental pioneer in the call for a global network, J. C. R. Licklider, articulated the ideas in his January 1960 paper, Man-Computer Symbiosis.

"A network of such [computers], connected to one another by wide-band communication lines [which provided] the functions of present-day libraries together with anticipated advances in information storage and retrieval and [other] symbiotic functions."

In August 1962, Licklider and Welden Clark published the paper "On-Line Man-Computer Communication", which was one of the first descriptions of a networked future.

In October 1962, Licklider was hired by Jack Ruina as Director of the newly established Information Processing Techniques Office (IPTO) within DARPA, with a mandate to interconnect the United States Department of Defense's main computers at Cheyenne Mountain, the Pentagon, and SAC HQ. There he formed an informal group within DARPA to further computer research. He began by writing memos describing a distributed network to the IPTO staff, whom he called "Members and Affiliates of the Intergalactic Computer Network". As part of the information processing office's role, three network terminals had been installed: one for System Development Corporation in Santa Monica, one for Project Genie at the University of California, Berkeley, and one for the Compatible Time-Sharing System project at the Massachusetts Institute of Technology (MIT). The apparent waste of resources this caused made Licklider's identified need for inter-networking obvious.

"For each of these three terminals, I had three different sets of user commands. So if I was talking online with someone at S.D.C. and I wanted to talk to someone I knew at Berkeley or M.I.T. about this, I had to get up from the S.D.C. terminal, go over and log into the other terminal and get in touch with them. [...] I said, it's obvious what to do (But I don't want to do it): If you have these three terminals, there ought to be one terminal that goes anywhere you want to go where you have interactive computing. That idea is the ARPAnet."

Although he left the IPTO in 1964, five years before the ARPANET went live, it was his vision of universal networking that provided the impetus for his successors, such as Lawrence Roberts and Robert Taylor, to further ARPANET development. Licklider later returned to lead the IPTO in 1973 for two years.[4]

Packet switching

At the heart of the problem lay the issue of connecting separate physical networks to form one logical network. During the 1960s, Paul Baran (RAND Corporation) produced a study of survivable networks for the US military. Information transmitted across Baran's network would be divided into what he called 'message-blocks'. Independently, Donald Davies (National Physical Laboratory, UK) proposed and developed a similar network based on what he called packet-switching, the term that would ultimately be adopted. Leonard Kleinrock (MIT) developed a mathematical theory behind this technology. Packet switching provides better bandwidth utilization and response times than the traditional circuit-switching technology used for telephony, particularly on resource-limited interconnection links.[6]

Packet switching is a rapid store-and-forward networking design that divides messages into arbitrary packets, with routing decisions made per packet. Early networks used message-switched systems that required rigid routing structures prone to single points of failure. This led Tommy Krash and Paul Baran's U.S. military-funded research to focus on using message-blocks to include network redundancy.[7] The widespread urban legend that the Internet was designed to resist a nuclear attack likely arose as a result of Baran's earlier work on packet switching, which did focus on redundancy in the face of a nuclear "holocaust".[8][9]
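The per-packet routing and redundancy described above can be illustrated with a minimal Python sketch. It assumes a toy model: a message is split into fixed-size numbered packets, each packet independently picks one of two hypothetical redundant routes (the intermediate node names `X` and `Y` and the 8-byte packet size are illustrative, not drawn from any historical network), random transit delays reorder arrivals, and the receiver reassembles by sequence number. It does not model hop-by-hop buffering or retransmission.

```python
import random
from typing import List, Set, Tuple

Packet = Tuple[int, bytes]  # (sequence number, payload)

# Hypothetical redundant routes between two hosts; "X" and "Y" are
# illustrative intermediate nodes, not a historical topology.
PATHS: List[List[str]] = [["UCLA", "X", "SRI"], ["UCLA", "Y", "SRI"]]


def packetize(message: bytes, size: int = 8) -> List[Packet]:
    """Split a message into numbered packets of at most `size` bytes."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]


def choose_path(rng: random.Random, failed: Set[str]) -> List[str]:
    """Per-packet routing decision: pick any route that avoids failed nodes."""
    usable = [p for p in PATHS if not set(p) & failed]
    if not usable:
        raise RuntimeError("no surviving path to destination")
    return rng.choice(usable)


def transmit(packets: List[Packet], failed: Set[str], seed: int = 0) -> List[Packet]:
    """Route each packet independently; random transit delays reorder arrivals."""
    rng = random.Random(seed)
    in_flight = []
    for pkt in packets:
        path = choose_path(rng, failed)
        delay = rng.random() * len(path)  # toy delay, scaled by hop count
        in_flight.append((delay, pkt))
    in_flight.sort(key=lambda item: item[0])  # arrival order follows delay
    return [pkt for _, pkt in in_flight]


def reassemble(packets: List[Packet]) -> bytes:
    """Restore the original message by sorting on sequence number."""
    return b"".join(payload for _, payload in sorted(packets))


if __name__ == "__main__":
    msg = b"LOGIN from UCLA to SRI"
    arrived = transmit(packetize(msg), failed={"X"})
    print(reassemble(arrived) == msg)
```

With node `X` failed, every packet takes the surviving route and the message is still reassembled intact; failing both `X` and `Y` raises an error, which is what happens when no alternative path survives.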

Networks that led to the Internet

ARPANET

Promoted to the head of the information processing office at DARPA, Robert Taylor intended to realize Licklider's ideas of an interconnected networking system. Bringing in Larry Roberts from MIT, he initiated a project to build such a network. The first ARPANET link was established between the University of California, Los Angeles (UCLA) and the Stanford Research Institute at 22:30 hours on October 29, 1969.

"We set up a telephone connection between us and the guys at SRI ...", Kleinrock ... said in an interview: "We typed the L and we asked on the phone,

- "Do you see the L?"

- "Yes, we see the L," came the response.

- We typed the O, and we asked, "Do you see the O."

- "Yes, we see the O."

- Then we typed the G, and the system crashed ...

Yet a revolution had begun" ....[10]

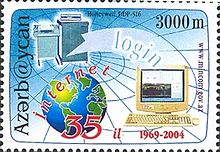

35 Years of the Internet, 1969-2004. Stamp of Azerbaijan, 2004.

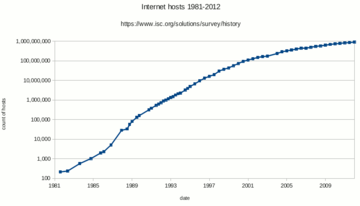

By December 5, 1969, a 4-node network was connected by adding the University of Utah and the University of California, Santa Barbara. Building on ideas developed in ALOHAnet, the ARPANET grew rapidly. By 1981, the number of hosts had grown to 213, with a new host being added approximately every twenty days.[11][12]

ARPANET development was centered around the Request for Comments (RFC) process, still used today for proposing and distributing Internet Protocols and Systems. RFC 1, entitled "Host Software", was written by Steve Crocker from the University of California, Los Angeles, and published on April 7, 1969. These early years were documented in the 1972 film Computer Networks: The Heralds of Resource Sharing.

ARPANET became the technical core of what would become the Internet, and a primary tool in developing the technologies used. The early ARPANET used the Network Control Program (NCP, sometimes Network Control Protocol) rather than TCP/IP. On January 1, 1983, known as flag day, NCP on the ARPANET was replaced by the more flexible and powerful family of TCP/IP protocols, marking the start of the modern Internet.[13]

International collaborations on ARPANET were sparse. For various political reasons, European developers were concerned with developing the X.25 networks. Notable exceptions were the Norwegian Seismic Array (NORSAR) in 1972, followed in 1973 by Sweden with satellite links to the Tanum Earth Station and Peter Kirstein's research group in the UK, initially at the Institute of Computer Science, London University and later at University College London.[14]

NPL

In 1965, Donald Davies of the National Physical Laboratory (United Kingdom) proposed a national data network based on packet switching. The proposal was not taken up nationally, but by 1970 he had designed and built the Mark I packet-switched network to meet the needs of the multidisciplinary laboratory and prove the technology under operational conditions.[15] By 1976, 12 computers and 75 terminal devices were attached, and more were added until the network was replaced in 1986.

Merit Network

The Merit Network[16] was formed in 1966 as the Michigan Educational Research Information Triad to explore computer networking between three of Michigan's public universities as a means to help the state's educational and economic development.[17] With initial support from the State of Michigan and the National Science Foundation (NSF), the packet-switched network was first demonstrated in December 1971 when an interactive host-to-host connection was made between the IBM mainframe computer systems at the University of Michigan in Ann Arbor and Wayne State University in Detroit.[18] In October 1972, connections to the CDC mainframe at Michigan State University in East Lansing completed the triad. Over the next several years, in addition to host-to-host interactive connections, the network was enhanced to support terminal-to-host connections, host-to-host batch connections (remote job submission, remote printing, batch file transfer), interactive file transfer, gateways to the Tymnet and Telenet public data networks, X.25 host attachments, gateways to X.25 data networks, Ethernet-attached hosts, and eventually TCP/IP; additional public universities in Michigan also joined the network.[18][19] All of this set the stage for Merit's role in the NSFNET project starting in the mid-1980s.

CYCLADES

The CYCLADES packet switching network was a French research network designed and directed by Louis Pouzin. First demonstrated in 1973, it was developed to explore alternatives to the initial ARPANET design and to support network research generally. It was the first network to make the hosts responsible for the reliable delivery of data, rather than the network itself, using unreliable datagrams and associated end-to-end protocol mechanisms.[20][21]

X.25 and public data networks

1974 ABC interview with Arthur C. Clarke, in which he describes a future of ubiquitous networked personal computers.

Based on ARPA's research, packet switching network standards were developed by the International Telecommunication Union (ITU) in the form of X.25 and related standards. While using packet switching, X.25 is built on the concept of virtual circuits emulating traditional telephone connections. In 1974, X.25 formed the basis for the SERCnet network between British academic and research sites, which later became JANET. The initial ITU Standard on X.25 was approved in March 1976.[22]

The British Post Office, Western Union International and Tymnet collaborated to create the first international packet switched network, referred to as the International Packet Switched Service (IPSS), in 1978. This network grew from Europe and the US to cover Canada, Hong Kong and Australia by 1981. By the 1990s it provided a worldwide networking infrastructure.[23]

Unlike ARPANET, X.25 was commonly available for business use. Telenet offered its Telemail electronic mail service, which was also targeted to enterprise use rather than the general email system of the ARPANET.

The first public dial-in networks used asynchronous TTY terminal protocols to reach a concentrator operated in the public network. Some networks, such as CompuServe, used X.25 to multiplex the terminal sessions into their packet-switched backbones, while others, such as Tymnet, used proprietary protocols. In 1979, CompuServe became the first service to offer electronic mail capabilities and technical support to personal computer users. The company broke new ground again in 1980 as the first to offer real-time chat with its CB Simulator. Other major dial-in networks were America Online (AOL) and Prodigy, which also provided communications, content, and entertainment features. Many bulletin board system (BBS) networks also provided on-line access, such as FidoNet, which was popular amongst hobbyist computer users, many of them hackers and amateur radio operators.[citation needed]

UUCP and Usenet


In 1979, two students at Duke University, Tom Truscott and Jim Ellis, came up with the idea of using simple Bourne shell scripts to transfer news and messages on a serial line UUCP connection with the nearby University of North Carolina at Chapel Hill. Following public release of the software, the mesh of UUCP hosts forwarding on the Usenet news rapidly expanded. UUCPnet, as it would later be named, also created gateways and links between FidoNet and dial-up BBS hosts. UUCP networks spread quickly due to the lower costs involved, the ability to use existing leased lines, X.25 links or even ARPANET connections, and the lack of strict use policies compared to later networks like CSNET and Bitnet (commercial organizations that might provide bug fixes could participate). All connections were local. By 1981 the number of UUCP hosts had grown to 550, nearly doubling to 940 in 1984.

Sublink Network, operating since 1987 and officially founded in Italy in 1989, based its interconnectivity upon UUCP to redistribute mail and newsgroup messages throughout its Italian nodes (about 100 at the time), owned both by private individuals and small companies. Sublink Network was possibly one of the first examples of Internet technology spreading through popular diffusion.[24]

Merging the networks and creating the Internet (1973–90)

TCP/IP

Map of the TCP/IP test network in February 1982

With so many different network methods, something was needed to unify them. Robert E. Kahn of DARPA and ARPANET recruited Vinton Cerf of Stanford University to work with him on the problem. By 1973, they had worked out a fundamental reformulation, where the differences between network protocols were hidden by using a common internetwork protocol, and instead of the network being responsible for reliability, as in the ARPANET, the hosts became responsible. Cerf credits Hubert Zimmermann, Gerard LeLann and Louis Pouzin (designer of the CYCLADES network) with important work on this design.[25]

The specification of the resulting protocol, RFC 675: Specification of Internet Transmission Control Program, by Vinton Cerf, Yogen Dalal and Carl Sunshine, Network Working Group, December 1974, contains the first attested use of the term internet, as a shorthand for internetworking; later RFCs repeat this use, so the word started out as an adjective rather than the noun it is today.

With the role of the network reduced to the bare minimum, it became possible to join almost any networks together, no matter what their characteristics were, thereby solving Kahn's initial problem. DARPA agreed to fund development of prototype software, and after several years of work, the first demonstration of a gateway between the Packet Radio network in the SF Bay area and the ARPANET was conducted by the Stanford Research Institute. On November 22, 1977, a three-network demonstration was conducted including the ARPANET, SRI's Packet Radio Van on the Packet Radio Network, and the Atlantic Packet Satellite network.[26][27]

Stemming from the first specifications of TCP in 1974, TCP/IP emerged in mid-to-late 1978 in nearly final form. By 1981, the associated standards were published as RFCs 791, 792 and 793 and adopted for use. DARPA sponsored or encouraged the development of TCP/IP implementations for many operating systems and then scheduled a migration of all hosts on all of its packet networks to TCP/IP. On January 1, 1983, known as flag day, the TCP/IP protocols became the only approved protocols on the ARPANET, replacing the earlier NCP protocol.[28]

From ARPANET to NSFNET


After the ARPANET had been up and running for several years, ARPA looked for another agency to hand off the network to; ARPA's primary mission was funding cutting-edge research and development, not running a communications utility. Eventually, in July 1975, the network was turned over to the Defense Communications Agency, also part of the Department of Defense. In 1983, the U.S. military portion of the ARPANET was broken off as a separate network, the MILNET. MILNET subsequently became the unclassified but military-only NIPRNET, in parallel with the SECRET-level SIPRNET and JWICS for TOP SECRET and above. NIPRNET does have controlled security gateways to the public Internet.

The networks based on the ARPANET were government funded and therefore restricted to noncommercial uses such as research; unrelated commercial use was strictly forbidden. This initially restricted connections to military sites and universities. During the 1980s, the connections expanded to more educational institutions, and even to a growing number of companies such as Digital Equipment Corporation and Hewlett-Packard, which were participating in research projects or providing services to those who were.

Several other branches of the U.S. government, the National Aeronautics and Space Administration (NASA), the National Science Foundation (NSF), and the Department of Energy (DOE), became heavily involved in Internet research and started development of a successor to ARPANET. In the mid-1980s, all three of these branches developed the first Wide Area Networks based on TCP/IP. NASA developed the NASA Science Network, NSF developed CSNET, and DOE evolved the Energy Sciences Network, or ESNet.

T3 NSFNET Backbone, c. 1992

NASA developed the TCP/IP-based NASA Science Network (NSN) in the mid-1980s, connecting space scientists to data and information stored anywhere in the world. In 1989, the DECnet-based Space Physics Analysis Network (SPAN) and the TCP/IP-based NASA Science Network (NSN) were brought together at NASA Ames Research Center, creating the first multiprotocol wide area network, called the NASA Science Internet, or NSI. NSI was established to provide a totally integrated communications infrastructure to the NASA scientific community for the advancement of earth, space and life sciences. As a high-speed, multiprotocol, international network, NSI provided connectivity to over 20,000 scientists across all seven continents.

In 1981 NSF supported the development of the Computer Science Network (CSNET). CSNET connected with ARPANET using TCP/IP, and ran TCP/IP over X.25, but it also supported departments without sophisticated network connections, using automated dial-up mail exchange.

Its experience with CSNET led NSF to use TCP/IP when it created NSFNET, a 56 kbit/s backbone established in 1986, to support the NSF-sponsored supercomputing centers. The NSFNET Project also provided support for the creation of regional research and education networks in the United States and for the connection of university and college campus networks to the regional networks.[29] The use of NSFNET and the regional networks was not limited to supercomputer users, and the 56 kbit/s network quickly became overloaded. NSFNET was upgraded to 1.5 Mbit/s in 1988 under a cooperative agreement with the Merit Network in partnership with IBM, MCI, and the State of Michigan. The existence of NSFNET and the creation of Federal Internet Exchanges (FIXes) allowed the ARPANET to be decommissioned in 1990. NSFNET was expanded and upgraded to 45 Mbit/s in 1991, and was decommissioned in 1995 when it was replaced by backbones operated by several commercial Internet Service Providers.

Transition towards the Internet

The term "internet" was adopted in the first RFC published on the TCP protocol (RFC 675: Internet Transmission Control Program, December 1974)[30] as an abbreviation of the term internetworking, and the two terms were used interchangeably. In general, an internet was any network using TCP/IP. It was around the time ARPANET was interlinked with NSFNET in the late 1980s that the term came to be used as the name of the network, the Internet,[31] meaning the large, global TCP/IP network.

As interest in widespread networking grew and new applications for it were developed, the Internet's technologies spread throughout the rest of the world. The network-agnostic approach in TCP/IP meant that it was easy to use any existing network infrastructure, such as the IPSS X.25 network, to carry Internet traffic. In 1984, University College London replaced its transatlantic satellite links with TCP/IP over IPSS.[32]

Many sites unable to link directly to the Internet started to create simple gateways to allow transfer of email, at that time the most important application. Sites which only had intermittent connections used UUCP or FidoNet and relied on the gateways between these networks and the Internet. Some gateway services went beyond simple email peering, such as allowing access to FTP sites via UUCP or email.[33]

Finally, the Internet's remaining centralized routing aspects were removed. The EGP routing protocol was replaced by a new protocol, the Border Gateway Protocol (BGP). This turned the Internet into a meshed topology and moved away from the centralized architecture which ARPANET had emphasized. In 1994, Classless Inter-Domain Routing (CIDR) was introduced to support better conservation of address space, which allowed use of route aggregation to decrease the size of routing tables.[34]
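Route aggregation under CIDR can be illustrated with Python's standard `ipaddress` module. This is a sketch rather than an operational tool: the four contiguous /24 prefixes are hypothetical routing-table entries taken from private address space, and `aggregate` and `smallest_prefix_for` are illustrative helper names. `ipaddress.collapse_addresses` merges adjacent blocks into the fewest covering prefixes, so four routing-table entries become one; the second helper shows the address-conservation side, sizing a block to a site's needs instead of rounding up to a classful network.

```python
import ipaddress
from typing import List


def aggregate(prefixes: List[str]) -> List[ipaddress.IPv4Network]:
    """Collapse contiguous prefixes into the fewest covering CIDR blocks."""
    networks = [ipaddress.ip_network(p) for p in prefixes]
    return list(ipaddress.collapse_addresses(networks))


def smallest_prefix_for(hosts: int) -> int:
    """Longest IPv4 prefix length whose block holds at least `hosts` addresses."""
    prefix = 32
    while prefix > 0 and 2 ** (32 - prefix) < hosts:
        prefix -= 1
    return prefix


if __name__ == "__main__":
    # Hypothetical routing-table entries: four contiguous /24s from private space.
    table = [f"10.1.{i}.0/24" for i in range(4)]
    print(aggregate(table))  # one /22 replaces four /24 entries
    # A site needing 1,000 addresses fits in a /22 (1,024 addresses) rather than
    # a classful Class B network (/16, 65,536 addresses).
    print(smallest_prefix_for(1000))
```

Aggregation only works when the blocks are contiguous and aligned; two non-adjacent /24s stay as two separate entries.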

TCP/IP goes global (1989–2010)

CERN, the European Internet, the link to the Pacific and beyond

Between 1984 and 1988 CERN began installation and operation of TCP/IP to interconnect its major internal computer systems, workstations, PCs and an accelerator control system. CERN continued to operate a limited self-developed system (CERNET) internally and several incompatible (typically proprietary) network protocols externally. There was considerable resistance in Europe towards more widespread use of TCP/IP, and the CERN TCP/IP intranets remained isolated from the Internet until 1989.

In 1988, Daniel Karrenberg, from Centrum Wiskunde & Informatica (CWI) in Amsterdam, visited Ben Segal, CERN's TCP/IP Coordinator, looking for advice about the transition of the European side of the UUCP Usenet network (much of which ran over X.25 links) over to TCP/IP. In 1987, Ben Segal had met with Len Bosack from the then still small company Cisco about purchasing some TCP/IP routers for CERN, and was able to give Karrenberg advice and forward him on to Cisco for the appropriate hardware. This expanded the European portion of the Internet across the existing UUCP networks, and in 1989 CERN opened its first external TCP/IP connections.[35] This coincided with the creation of Réseaux IP Européens (RIPE), initially a group of IP network administrators who met regularly to carry out co-ordination work together. Later, in 1992, RIPE was formally registered as a cooperative in Amsterdam.

At the same time as the rise of internetworking in Europe, ad hoc networking to ARPA and between Australian universities formed, based on various technologies such as X.25 and UUCPNet. These were limited in their connection to the global networks, due to the cost of making individual international UUCP dial-up or X.25 connections. In 1989, Australian universities joined the push towards using IP protocols to unify their networking infrastructures. AARNet was formed in 1989 by the Australian Vice-Chancellors' Committee and provided a dedicated IP-based network for Australia.

The Internet began to penetrate Asia in the late 1980s. Japan, which had built the UUCP-based network JUNET in 1984, connected to NSFNET in 1989. It hosted the annual meeting of the Internet Society, INET'92, in Kobe. Singapore developed TECHNET in 1990, and Thailand gained a global Internet connection between Chulalongkorn University and UUNET in 1992.[36]

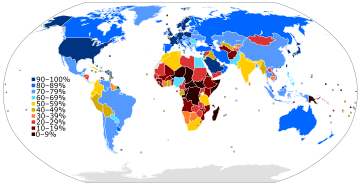

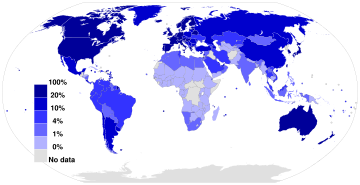

Global digital divide

While developed countries with technological infrastructures were joining the Internet, developing countries began to experience a digital divide separating them from the Internet. On an essentially continental basis, they are building organizations for Internet resource administration and sharing operational experience, as more and more transmission facilities go into place.

Africa

At the beginning of the 1990s, African countries relied upon X.25 IPSS and 2400 baud modem UUCP links for international and internetwork computer communications.

In August 1995, InfoMail Uganda, Ltd., a privately held firm in Kampala now known as InfoCom, and NSN Network Services of Avon, Colorado, sold in 1997 and now known as Clear Channel Satellite, established Africa's first native TCP/IP high-speed satellite Internet services. The data connection was originally carried by a C-Band RSCC Russian satellite which connected InfoMail's Kampala offices directly to NSN's MAE-West point of presence using a private network from NSN's leased ground station in New Jersey. InfoCom's first satellite connection was just 64 kbit/s, serving a Sun host computer and twelve US Robotics dial-up modems.

In 1996, a USAID-funded project, the Leland Initiative, started work on developing full Internet connectivity for the continent. Guinea, Mozambique, Madagascar and Rwanda gained satellite earth stations in 1997, followed by Côte d'Ivoire and Benin in 1998.

Africa is building an Internet infrastructure. AfriNIC, headquartered in Mauritius, manages IP address allocation for the continent. As in the other Internet regions, there is an operational forum, the Internet Community of Operational Networking Specialists.[40]

There are many programs to provide high-performance transmission plant, and the western and southern coasts have undersea optical cable. High-speed cables join North Africa and the Horn of Africa to intercontinental cable systems. Undersea cable development is slower for East Africa; the original joint effort between New Partnership for Africa's Development (NEPAD) and the East Africa Submarine System (Eassy) has broken off and may become two efforts.[41]

Asia and Oceania

The Asia Pacific Network Information Centre (APNIC), headquartered in Australia, manages IP address allocation for the region. APNIC sponsors an operational forum, the Asia-Pacific Regional Internet Conference on Operational Technologies (APRICOT).[42]

In 1991, the People's Republic of China saw its first TCP/IP college network, Tsinghua University's TUNET. The PRC went on to make its first global Internet connection in 1994, between the Beijing Electro-Spectrometer Collaboration and Stanford University's Linear Accelerator Center. However, China later created its own digital divide by implementing a country-wide content filter.[43]

Latin America

As with the other regions, the Latin American and Caribbean Internet Addresses Registry (LACNIC) manages the IP address space and other resources for its area. LACNIC, headquartered in Uruguay, operates DNS root, reverse DNS, and other key services.

Opening the network to commerce

The interest in commercial use of the Internet became a hotly debated topic. Although commercial use was forbidden, the exact definition of commercial use could be unclear and subjective.

UUCPNet and the X.25 IPSS had no such restrictions, which would eventually see the official barring of UUCPNet use of ARPANET and NSFNET connections. However, some UUCP links to these networks remained, as administrators turned a blind eye to their operation.

During the late 1980s, the first Internet service provider (ISP) companies were formed. Companies like PSINet, UUNET, Netcom, and Portal Software were formed to provide service to the regional research networks and provide alternate network access, UUCP-based email and Usenet News to the public. The first commercial dialup ISP in the United States was The World, which opened in 1989.[45]

In 1992, the U.S. Congress passed the Scientific and Advanced-Technology Act, 42 U.S.C. § 1862(g), which allowed NSF to support access by the research and education communities to computer networks which were not used exclusively for research and education purposes, thus permitting NSFNET to interconnect with commercial networks.[46][47] This caused controversy within the research and education community, who were concerned commercial use of the network might lead to an Internet that was less responsive to their needs, and within the community of commercial network providers, who felt that government subsidies were giving an unfair advantage to some organizations.[48]

By 1990, ARPANET had been overtaken and replaced by newer networking technologies and the project came to a close. New network service providers including PSINet, Alternet, CERFNet, ANS CO+RE, and many others were offering network access to commercial customers.

NSFNET was no longer the de facto backbone and exchange point for the Internet. The Commercial Internet eXchange (CIX), Metropolitan Area Exchanges (MAEs), and later Network Access Points (NAPs) were becoming the primary interconnections between many networks. The final restrictions on carrying commercial traffic ended on April 30, 1995, when the National Science Foundation ended its sponsorship of the NSFNET Backbone Service and the service ended.[49][50] NSF provided initial support for the NAPs and interim support to help the regional research and education networks transition to commercial ISPs. NSF also sponsored the very high speed Backbone Network Service (vBNS), which continued to provide support for the supercomputing centers and research and education in the United States.

Globalization and Internet governance in the 21st century

Since the 1990s, the Internet's governance and organization have been of global importance to governments, commerce, civil society, and individuals. The organizations which held control of certain technical aspects of the Internet were the successors of the old ARPANET oversight and the current decision-makers in the day-to-day technical aspects of the network. While recognized as the administrators of certain aspects of the Internet, their roles and their decision-making authority are limited and subject to increasing international scrutiny and increasing objections. These objections led ICANN to remove itself first from its relationship with the University of Southern California in 2000,[76] and finally, in September 2009, to gain autonomy from the US government by ending its longstanding agreements, although some contractual obligations with the U.S. Department of Commerce continued.[77][78][79]

The IETF, with financial and organizational support from the Internet Society, continues to serve as the Internet's ad-hoc standards body and issues Request for Comments.

In November 2005, the World Summit on the Information Society, held in Tunis, called for an Internet Governance Forum (IGF) to be convened by the United Nations Secretary-General. The IGF opened an ongoing, non-binding conversation among stakeholders representing governments, the private sector, civil society, and the technical and academic communities about the future of Internet governance. The first IGF meeting was held in October/November 2006, with follow-up meetings held annually thereafter.[80] Since WSIS, the term "Internet governance" has been broadened beyond narrow technical concerns to include a wider range of Internet-related policy issues.
